Humans are remarkable learners. From the moment we’re born, we continuously absorb information, recognize new objects, and adapt our understanding of the world—without forgetting everything we knew yesterday. If you learn what a smartwatch is today, you don’t suddenly forget what a cuckoo clock is. This ability to learn incrementally throughout our lives is a hallmark of natural intelligence.

For artificial intelligence powering modern robots, this is still a monumental challenge. Standard deep learning models typically train on massive, static datasets and perform exceptionally well on what they’ve seen. But when you try to teach them something new, they often suffer from catastrophic forgetting—new knowledge overwrites and destroys the old. It’s like pouring fresh liquid into a full cup; the original contents spill out.

To build robots that truly learn and operate autonomously, AI must overcome this limitation. Such systems need to learn continually from a stream of experiences, often with only a few examples, identify things they’ve never seen before, and incorporate that new knowledge—all without retraining from scratch.

A recent research paper, “Continual Learning for Autonomous Robots: A Prototype-based Approach”, introduces an algorithm called Continually Learning Prototypes (CLP). It enables robots to learn online, detect new objects, and adapt in a semi-supervised way, all while mitigating forgetting. Let’s explore how CLP works.

The Challenge: Learning in the Wild

Before diving into CLP’s design, it’s essential to understand the hurdles that make lifelong learning so difficult. The researchers define a realistic scenario called Open World Continual Learning (OWCL), which combines several core challenges:

- Online Continual Learning: The robot receives one data sample at a time. It must process and learn from each experience immediately—without storing everything it sees.

- Few-Shot Learning: Robots often encounter new objects only once or twice. They must generalize from very few examples.

- Open-World Assumption: Unlike closed laboratory settings, real-world environments are full of surprises. The robot must recognize when something is genuinely unknown instead of misclassifying it as a known object. This ability is called novelty detection.

- Semi-Supervised Learning: Ideally, the robot should autonomously learn from unlabeled examples and later incorporate true labels when they become available—perhaps through human feedback.

Most continual learning methods struggle with these challenges. Many depend on “rehearsal,” storing old data for retraining, which costs memory and energy that embedded robots can rarely spare. Others rely on rigid task boundaries, assuming the learning environment is neatly structured. CLP drops both assumptions: it learns online without a replay buffer and without predefined task boundaries.

The Core Idea: Learning with Prototypes

At the heart of CLP lies the concept of a prototype. Instead of learning a complex global decision boundary, CLP learns a set of representative points—prototypes—that summarize the clusters of data in feature space.

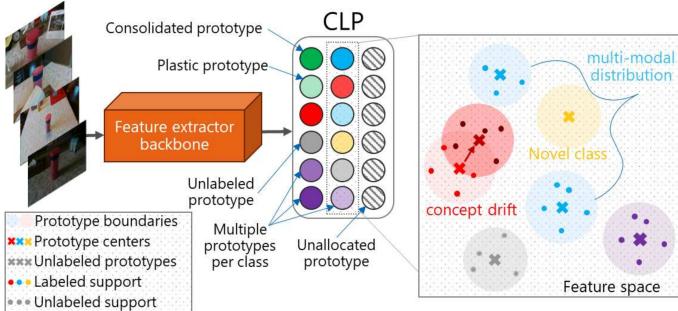

An input image passes through a pre-trained neural network (such as EfficientNet) acting as a feature extractor. It maps the image into a high-dimensional feature space, where similar objects lie close to each other. Each prototype acts as the center of a cluster corresponding to a concept (e.g., “mug,” “keyboard,” “shoe”). When a new image arrives, CLP identifies the prototype most similar to that input and uses its label for prediction.

Figure 1: Overview of CLP. Input features are mapped into prototype space, which captures known, novel, and drifting object categories.

CLP measures similarity efficiently using the dot product of normalized vectors, which for unit-length vectors equals their cosine similarity:

\[ s(\boldsymbol{\mu}, \boldsymbol{x}) = \boldsymbol{\mu} \cdot \boldsymbol{x} \]

When the model sees a labeled example \((x, \hat{y})\), it finds the most similar prototype \( \mu^* \). Then:

- If the prototype’s label \( y^* \) matches the true label \( \hat{y} \), the center \( \mu^* \) moves closer to the new feature vector \( x \).

- If the labels don’t match, \( \mu^* \) moves away from \( x \).

This simple rule—adaptation by attraction and repulsion—lets CLP learn online, one sample at a time.
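The attraction and repulsion rule can be sketched in a few lines of plain Python. This is a minimal illustration, not the paper's implementation: the helper names (`normalize`, `similarity`, `supervised_step`), the fixed learning rate `alpha=0.1`, the specific update form `mu ± alpha * (x - mu)`, and the re-normalization after each step are all assumptions made for the example.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]

def similarity(mu, x):
    """s(mu, x) = mu . x, assuming both vectors are unit length."""
    return sum(m * c for m, c in zip(mu, x))

def supervised_step(prototypes, labels, x, y_true, alpha=0.1):
    """Apply one online update in place and return the winning prototype's index.

    alpha is a fixed, illustrative learning rate (an assumption of this sketch).
    """
    x = normalize(x)
    # Find the most similar prototype mu*.
    best = max(range(len(prototypes)), key=lambda i: similarity(prototypes[i], x))
    # Attract on a label match, repel on a mismatch.
    sign = 1.0 if labels[best] == y_true else -1.0
    moved = [m + sign * alpha * (c - m) for m, c in zip(prototypes[best], x)]
    # Re-normalize so the dot product stays a cosine similarity.
    prototypes[best] = normalize(moved)
    return best

prototypes = [normalize([1.0, 0.0]), normalize([0.0, 1.0])]
labels = ["mug", "shoe"]
winner = supervised_step(prototypes, labels, [0.8, 0.6], "mug")
print(winner, labels[winner], prototypes[winner])
```

Because only the winning prototype is touched, each sample costs one pass over the prototypes and one vector update, which is what makes the rule usable one sample at a time.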

The Secret Sauce: Metaplasticity to Beat Forgetting

CLP’s primary innovation is its solution to the plasticity-stability dilemma: the trade-off between learning new things quickly and retaining old knowledge reliably.

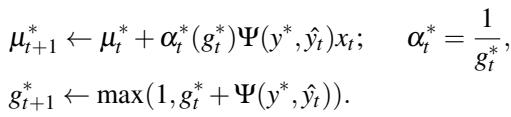

Traditional models use a single, fixed learning rate \( \alpha \). A high learning rate makes learning fast but unstable; a low one preserves old knowledge but slows adaptation. CLP solves this by giving each prototype its own adaptive learning rate, inspired by the biological principle of metaplasticity—where a neuron’s ability to change itself also adapts over time.

Each prototype maintains a goodness score, reflecting its past performance:

- When a prototype predicts correctly, its goodness increases.

- When it errs, the goodness decreases.

The prototype’s learning rate becomes inversely proportional to this score:

\[ \alpha = \frac{1}{g} \]

Thus:

- Stable prototypes (high goodness) learn slowly, protecting established knowledge.

- Plastic prototypes (low goodness) adapt rapidly to fix errors or learn new concepts.

Figure 2: CLP’s metaplasticity mechanism dynamically tunes the learning rate per prototype based on performance.

This adaptive mechanism allows CLP to consolidate knowledge locally and automatically. Crucially, it removes the need for replay or memory buffers, which suits robots with limited storage and power budgets.
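To make the goodness-driven learning rate concrete, here is a small sketch. Only the relation \( \alpha = 1/g \) and the direction of the goodness changes come from the description above. The class name `PrototypeState`, the initial goodness of 1, the unit step size, and the lower bound `floor` (which keeps the learning rate at or below 1 and avoids division by zero) are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class PrototypeState:
    label: str
    goodness: float = 1.0  # starting value is an assumption of this sketch

    @property
    def learning_rate(self) -> float:
        """alpha = 1 / g: high goodness means slow, stable updates."""
        return 1.0 / self.goodness

    def record(self, correct: bool, step: float = 1.0, floor: float = 1.0) -> None:
        """Raise goodness after a correct prediction, lower it after an error."""
        if correct:
            self.goodness += step
        else:
            # Clamp so goodness never reaches zero (hypothetical safeguard).
            self.goodness = max(floor, self.goodness - step)

mug = PrototypeState("mug")
for outcome in (True, True, True, False):
    mug.record(outcome)
    print(f"goodness={mug.goodness:.1f}  alpha={mug.learning_rate:.3f}")
```

In this toy run, three correct predictions raise the goodness from 1 to 4, so the learning rate falls from 1 to 0.25. A single error then lowers the goodness to 3 and the learning rate climbs back to one third.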

Entering the Open World: Novelty Detection and Unsupervised Learning

Beyond preserving knowledge, robots must recognize novelty—the unknown objects they will inevitably encounter. CLP handles this gracefully through a novelty detection function based on a similarity threshold \( \tau \).

If a new input’s similarity to all existing prototypes falls below \( \tau \), it resides in “open space” and is flagged as novel.

Figure 3: Detecting unknown samples using similarity thresholds.
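The threshold test can be written as a single comparison against the best-matching prototype. The sketch below assumes unit-length prototypes and uses an arbitrary threshold `tau=0.5`. The function names and the choice to treat an empty prototype set as novel are assumptions, not details from the paper.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]

def max_similarity(prototypes, x):
    """Highest dot-product similarity between x and any (unit-length) prototype."""
    x = normalize(x)
    return max(sum(m * c for m, c in zip(mu, x)) for mu in prototypes)

def is_novel(prototypes, x, tau=0.5):
    """Flag x as novel when no prototype reaches similarity tau.

    tau=0.5 is an illustrative value; an empty memory treats everything as novel.
    """
    if not prototypes:
        return True
    return max_similarity(prototypes, x) < tau

known = [[1.0, 0.0]]
print(is_novel(known, [0.0, 1.0]))  # orthogonal to the only prototype
print(is_novel(known, [0.8, 0.6]))  # similarity 0.8 with the prototype
```

Checking only the maximum similarity is equivalent to requiring that every prototype falls below the threshold, so the test needs no extra bookkeeping.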

When novelty is detected, CLP:

- Allocates a new prototype: The novel feature vector becomes the center of a new cluster.

- Assigns a pseudo-label: As the true label is unknown, CLP creates a temporary identifier.

- Learns without supervision: As similar inputs appear, the prototype updates its position, refining its cluster representation autonomously.

Later, a human supervisor or multimodal system can assign labels to each prototype cluster, converting the pseudo-labels into true ones. This transforms CLP’s autonomous clustering into semi-supervised continual learning—learning both with and without supervision, seamlessly over time.
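The allocate, pseudo-label, and relabel cycle could look roughly like the following. This is a hedged sketch: the class `OpenWorldMemory`, its method names, the `novel_<n>` pseudo-label format, and the fixed `tau` and `alpha` values are all hypothetical, and the unsupervised update simply pulls the nearest prototype toward the input.

```python
import itertools
import math

def normalize(v):
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]

class OpenWorldMemory:
    """Toy prototype store that allocates pseudo-labeled prototypes for novel inputs."""

    def __init__(self, tau=0.5, alpha=0.1):
        self.tau = tau        # novelty threshold (illustrative value)
        self.alpha = alpha    # fixed learning rate (illustrative value)
        self.centers = []
        self.labels = []
        self._ids = itertools.count()

    def observe(self, x):
        """Process one unlabeled sample and return the (pseudo-)label it maps to."""
        x = normalize(x)
        sims = [sum(m * c for m, c in zip(mu, x)) for mu in self.centers]
        if not sims or max(sims) < self.tau:
            # Novel input: it becomes the center of a new cluster.
            pseudo = f"novel_{next(self._ids)}"
            self.centers.append(x)
            self.labels.append(pseudo)
            return pseudo
        # Known region: refine the nearest prototype without supervision.
        best = sims.index(max(sims))
        moved = [m + self.alpha * (c - m) for m, c in zip(self.centers[best], x)]
        self.centers[best] = normalize(moved)
        return self.labels[best]

    def assign_label(self, pseudo, true_label):
        """Replace a pseudo-label once a supervisor provides the true one."""
        self.labels = [true_label if l == pseudo else l for l in self.labels]

memory = OpenWorldMemory()
first = memory.observe([1.0, 0.0])   # allocates a new prototype
memory.observe([0.9, 0.1])           # refines it
memory.observe([0.0, 1.0])           # allocates a second prototype
memory.assign_label(first, "mug")
print(memory.labels)
```

Relabeling touches only the label list, so the cluster centers learned without supervision are kept as they are when true labels arrive.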

Capturing Complexity: Multi-Modal Representations

Real-world categories rarely form neat, unimodal clusters. The class “chair,” for example, includes armchairs, office chairs, and beanbags—distinct visual appearances scattered across feature space. Most learning methods assume a single prototype per class, failing to capture this diversity.

CLP’s novelty detection naturally allocates multiple prototypes per class when necessary, resulting in rich multi-modal representations. Simple classes may need only one prototype; complex ones may require many. This flexibility improves accuracy and efficiency by modeling real-world variance faithfully.

Figure 4: CLP constructs multi-modal representations dynamically, allocating prototypes as needed per class.
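A toy 2D example shows why several prototypes per class can matter. The angles, class names, and helper functions below are invented for illustration. Here a "chair" class has two distant modes, and a competing "table" class sits between them.

```python
import math

def unit(deg):
    """Unit vector at the given angle in degrees."""
    r = math.radians(deg)
    return (math.cos(r), math.sin(r))

def mean_direction(vectors):
    """Unit vector pointing along the average of the given vectors."""
    sx = sum(v[0] for v in vectors)
    sy = sum(v[1] for v in vectors)
    n = math.hypot(sx, sy)
    return (sx / n, sy / n)

def nearest_label(prototypes, x):
    """Label of the prototype with the highest dot-product similarity to x."""
    return max(prototypes, key=lambda p: p[0][0] * x[0] + p[0][1] * x[1])[1]

chair_modes = [unit(0), unit(100)]   # e.g. office chairs vs. beanbags
table = (unit(60), "table")

# One prototype per mode versus a single averaged prototype per class.
multi = [(m, "chair") for m in chair_modes] + [table]
single = [(mean_direction(chair_modes), "chair"), table]

query = unit(95)  # close to the second chair mode
print("multi-prototype:", nearest_label(multi, query))
print("single-prototype:", nearest_label(single, query))
```

With one prototype per mode, the query lands next to the second chair prototype. The averaged chair prototype points at 50 degrees, which is farther from the query than the table prototype, so the single-prototype model picks "table" in this contrived setup.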

CLP in Action: Experimental Results

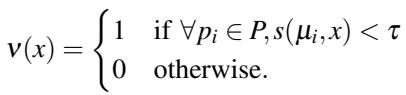

The CLP framework was evaluated on the OpenLORIS dataset, a collection of everyday objects recorded in diverse settings like homes, offices, and malls. The dataset includes challenges such as occlusion, lighting variation, and background clutter, which makes it well suited to evaluating continual learning in the wild.

Test 1: Supervised Online Learning

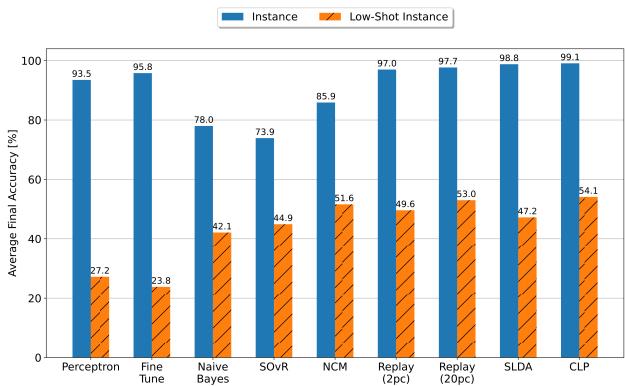

In fully supervised continual learning, CLP was compared against eight existing single-layer methods. It outperformed every alternative, particularly in low-shot scenarios (limited examples per class).

Figure 5: CLP achieves state-of-the-art accuracy in supervised and few-shot online continual learning.

Test 2: Novelty Detection Performance

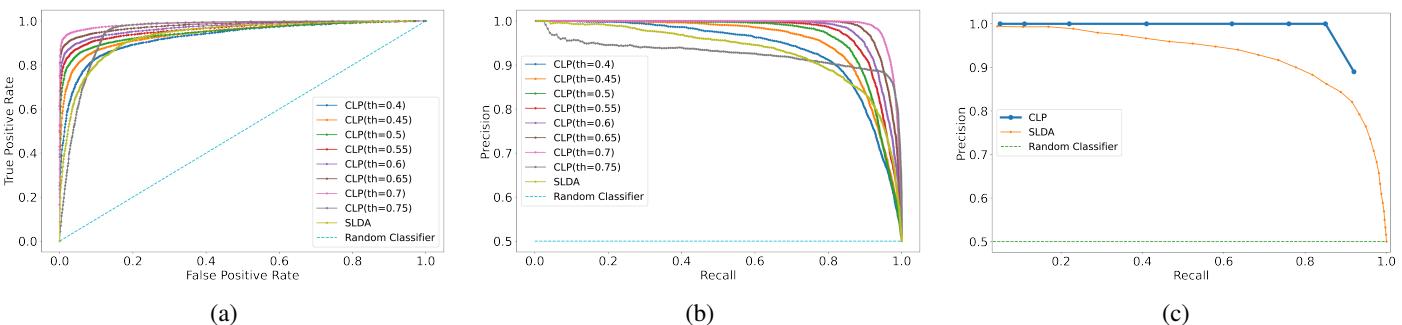

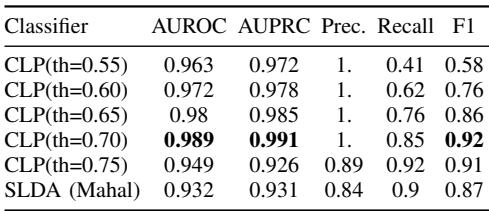

Next, the researchers evaluated CLP’s open-set recognition—distinguishing between known “base” classes and unseen “novel” ones. Across all thresholds tested, CLP demonstrated superior precision and recall compared to the best existing baseline (SLDA).

Figure 6: CLP’s novelty detection far exceeds SLDA in recognizing new classes.

Figure 7: Quantitative comparison of novelty detection accuracy.

CLP reaches a precision of 1.0 at several thresholds, meaning every sample it flagged as novel at those thresholds really was novel. This reliability is essential for robots encountering unknown objects.
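For readers less familiar with these metrics, the sketch below computes precision and recall for novelty detection on a tiny, made-up set of predictions. The data is purely illustrative and is not taken from the paper. The function name `novelty_precision_recall` is an assumption.

```python
def novelty_precision_recall(truly_novel, flagged_novel):
    """Precision and recall of 'novel' flags, given parallel lists of booleans."""
    pairs = list(zip(truly_novel, flagged_novel))
    tp = sum(1 for t, p in pairs if t and p)        # novel and flagged
    fp = sum(1 for t, p in pairs if not t and p)    # known but flagged
    fn = sum(1 for t, p in pairs if t and not p)    # novel but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical toy data: three novel samples, two known ones.
truly_novel = [True, True, True, False, False]
flagged_novel = [True, True, False, False, False]

precision, recall = novelty_precision_recall(truly_novel, flagged_novel)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this toy case no known sample is flagged, so precision is 1.0, but one novel sample is missed, so recall is two thirds. A precision of 1.0 therefore says the flags are trustworthy, not that every novel object was caught.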

Test 3: The Full Open-World Scenario

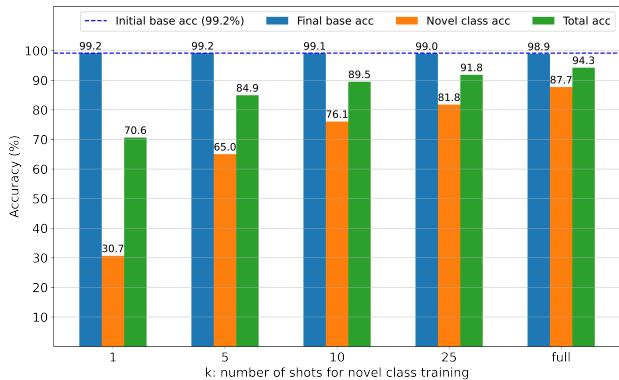

Finally, the researchers simulated a real-world continual learning cycle:

- Phase 1: CLP learns 20 base classes with full supervision.

- Phase 2: It encounters data containing 20 new, unlabeled classes (few-shot). CLP detects these as novel and learns prototypes for them without supervision.

- Phase 3: True labels are later assigned, and the system is evaluated across all 40 classes.

Figure 8: CLP retains nearly perfect base-class accuracy while learning new classes without supervision.

Results show:

- Minimal Forgetting: Base-class accuracy remains around 99% after the new classes are learned, so forgetting is negligible in this experiment.

- Strong Unsupervised Learning: Novel-class accuracy grows quickly, reaching 76% with only ten short training videos.

This demonstrates that CLP can autonomously detect, cluster, and learn from new experiences while preserving prior knowledge.

Conclusion: A Step Toward Truly Autonomous Learners

The Continually Learning Prototypes (CLP) algorithm marks a major milestone in building adaptable, lifelong learning systems. Its prototype-based framework, coupled with metaplasticity, enables robots to learn continually without replaying past data. With integrated novelty detection and open-world adaptability, CLP learns semi-supervised from the environment—much like living organisms do.

By outperforming previous methods in both supervised and open-world settings, CLP sets a strong benchmark for realistic Open World Continual Learning. Looking ahead, the authors plan to extend CLP to continual object detection and implement it on neuromorphic hardware such as Intel’s Loihi 2 chip—moving toward real-time, ultra-low-power autonomous learning.

With innovations like CLP, the vision of robots that learn and adapt as naturally as humans comes one step closer to reality.
