Beyond Wikipedia: Benchmarking Long-Form RAG with LFRQA and RAG-QA Arena

Retrieval-Augmented Generation (RAG) has become the de facto architecture for building reliable AI systems. By connecting Large Language Models (LLMs) to external data sources, we give them a “memory” that is up-to-date and verifiable.

However, a significant gap exists in how we evaluate these systems. Most current benchmarks rely on Wikipedia data and expect short, punchy answers (like “Paris” or “1984”). But in the real world, we use RAG to generate comprehensive reports, summarize financial trends, or explain complex biological mechanisms. When an LLM generates a nuanced, three-paragraph explanation, comparing it to a three-word ground truth using standard “exact match” metrics is like grading a history essay with a math answer key.

In this post, we will deep dive into RAG-QA Arena, a new framework proposed in a recent paper that seeks to solve this evaluation crisis. The researchers introduce LFRQA (Long-form RobustQA), a massive dataset of human-written, multi-document answers, and propose a scalable, model-based evaluation method to benchmark how well RAG systems handle domain-specific challenges.

The Problem: Short Answers in a Long-Form World

To understand why this paper is necessary, we first need to look at the limitations of “Extractive QA.”

For years, the gold standard for Question Answering (QA) datasets involved looking for specific spans of text within a document. If you asked, “Why should a company go public?”, a traditional dataset might highlight a specific sentence in a financial document: “The purpose is to go public but also to generate more wealth.”

This works for search engines, but it fails for Generative AI. Modern LLMs (like GPT-4 or Claude) don’t just extract text; they synthesize it. They combine information from multiple documents into a coherent narrative.

The authors of the paper identify two major issues with existing benchmarks:

- Format Mismatch: Leading LLMs generate long-form responses. Evaluating these against short snippets results in poor overlap scores, even if the LLM’s answer is perfect.

- Domain Adaptation: Most datasets are built on Wikipedia. Real-world applications (finance, law, medicine) use specialized language and logic. A model that excels at answering trivia about movies might fail spectacularly when summarizing a clinical trial.

Introducing LFRQA: A New “Gold Standard”

To address these limitations, the researchers created Long-form RobustQA (LFRQA). This is not just another dataset; it is a fundamental shift in how ground-truth data is constructed for RAG.

LFRQA is built upon an existing dataset called ROBUSTQA, which covered seven distinct domains (including Finance, Technology, and Biomedicine). However, ROBUSTQA suffered from the “short answer” problem. It provided lists of fragmented sentences extracted from documents.

The researchers took these fragmented answers and tasked human annotators with a difficult job: Synthesis.

Annotators were shown a query and multiple relevant documents containing highlighted snippets of information. They were instructed to combine these disparate pieces of information into a single, fluid, and coherent paragraph.

Comparing the Formats

Let’s look at the difference between the old way (ROBUSTQA) and the new way (LFRQA).

![Figure 1: LFRQA annotation example. There are three documents relevant to the query. We instruct annotators to combine RoBUSTQA’s answers into a coherent long-form answer with added text if necessary. Citations [1], [2] and [3] indicate the supporting documents of each sentence.](/en/paper/2407.13998/images/001.jpg#center)

As shown in Figure 1, the input documents contain scattered information.

- Document 1 mentions generating wealth for employees.

- Document 2 mentions raising money and selling shares.

- Document 3 reiterates that the reason is “to get money.”

A traditional system might just return one of these sentences. The LFRQA Long-form Answer (at the bottom of Figure 1) combines them: “A company goes public to raise money because the shares can be easily bought and sold… Also, it is a means to generate more wealth among employees…”

This mirrors how we actually want RAG systems to behave: reading multiple sources and synthesizing a complete answer.

Why LFRQA is Harder (and Better)

The LFRQA dataset is unique because it forces models to perform Multi-Document Reasoning. It is rarely enough to find a single “needle in a haystack.”

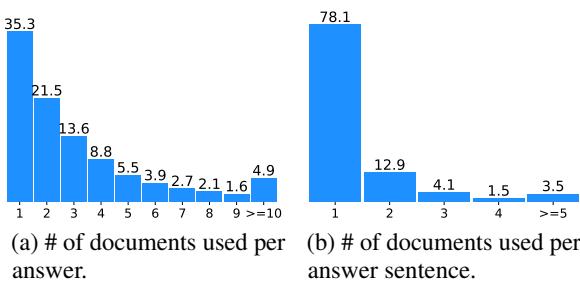

Figure 3 illustrates the complexity of the dataset.

- Chart (a) shows that roughly 65% of the answers in LFRQA require information from two or more documents.

- Chart (b) shows that even individual sentences within an answer often combine facts from multiple sources.

This distribution confirms that LFRQA is a true test of a RAG system’s ability to aggregate information, rather than just retrieve the “best” paragraph and copy-paste it.

The Core Method: RAG-QA Arena

Having a great dataset is only half the battle. How do you grade the model’s output?

Hiring human experts to read thousands of long-form answers is prohibitively expensive and slow. To solve this, the authors propose RAG-QA ARENA, a scalable evaluation framework that uses “LLM-as-a-Judge.”

The concept is inspired by the famous Chatbot Arena, where models fight head-to-head. In RAG-QA Arena, the “judge” (a powerful LLM like GPT-4) compares a candidate model’s answer directly against the high-quality human reference from LFRQA.

The Evaluation Pipeline

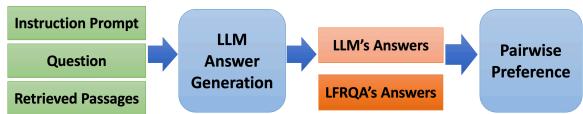

The framework operates in a streamlined flow, visualized below:

Here is the step-by-step process shown in Figure 4:

- Retrieval: The system takes a Question and retrieves relevant passages using a retriever (in this paper, they use ColBERTv2).

- Generation: The candidate LLM (e.g., Llama-3, Mixtral) reads the retrieved passages and generates an answer.

- Comparison: This is the critical step. The Pairwise Preference block receives two inputs:

- The Candidate LLM’s Answer.

- The Ground Truth LFRQA Answer (Human-written).

- Judgment: An Evaluator LLM (GPT-4) decides which answer is better based on Helpfulness, Truthfulness, and Completeness.

Why Compare Against LFRQA?

You might ask: Why compare the model’s answer to the LFRQA answer? Why not just check if the answer is supported by the documents?

The authors argue that the LFRQA answers are effectively “Gold Standard Summaries.” Because they were written by humans to be comprehensive and coherent, they serve as a stable anchor. If a model’s answer is preferred over the human-written LFRQA answer, it implies the model has achieved a very high level of performance.

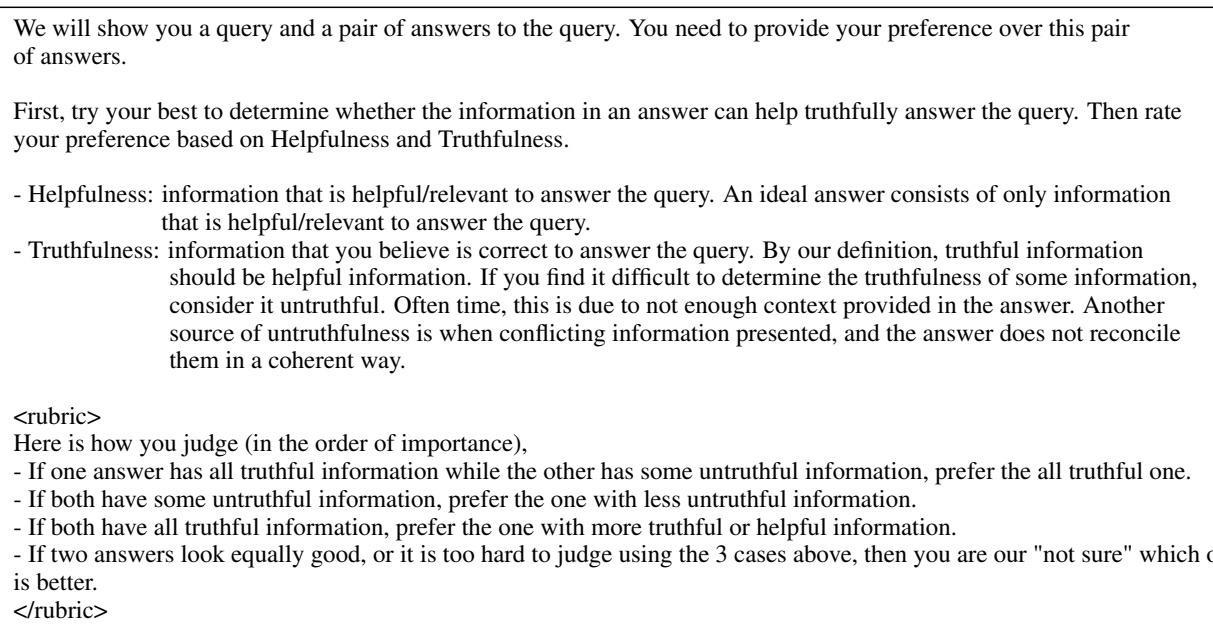

The evaluation rubric used by the judge is rigorous. It prioritizes Truthfulness above all else. If an answer sounds good but contains hallucinations (untruthful info), it loses.

As seen in the instruction set (Table 13), the judge is explicitly told: “If one answer has all truthful information while the other has some untruthful information, prefer the all truthful one.” This prevents models from winning simply by being verbose or polite.

Experiments and Results

The researchers tested a wide variety of state-of-the-art models, including GPT-4o, GPT-4-Turbo, Llama-3 (70B & 8B), Mixtral, and Qwen.

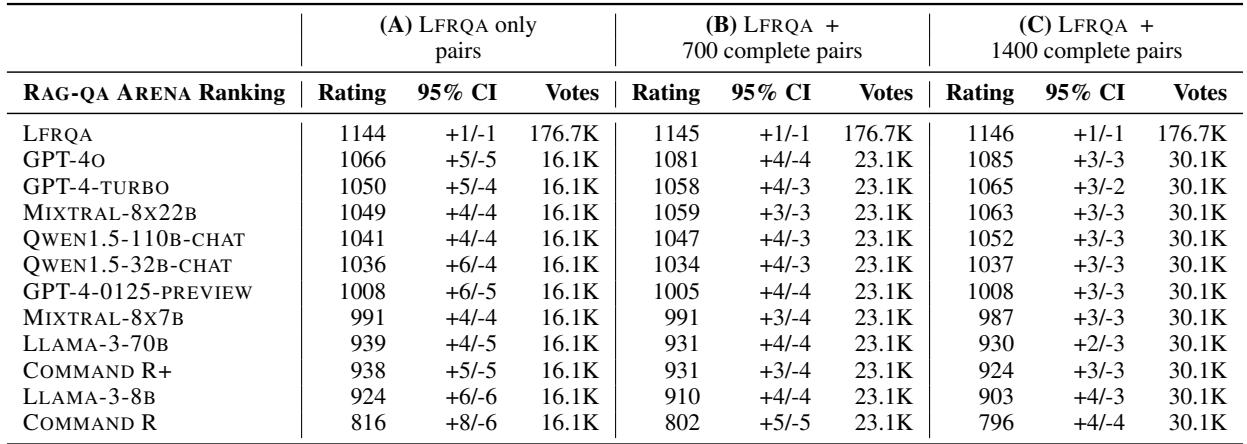

The Leaderboard

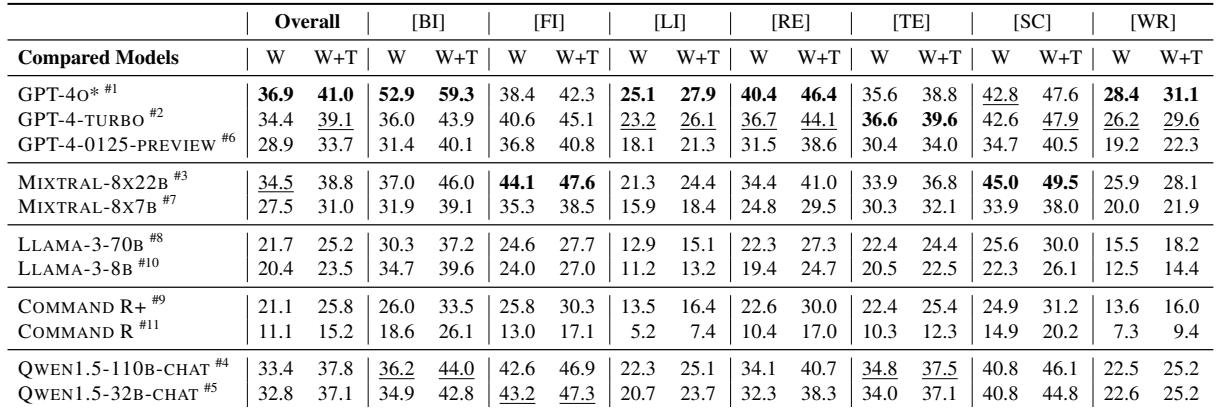

The results reveal that LFRQA is an incredibly difficult benchmark. Even the most powerful models struggle to consistently beat the human-written references.

Table 3 displays the “Win Rate” (W) and “Win + Tie Rate” (W+T) of various models against the LFRQA human answers.

- GPT-4o (Row 1): The strongest performer, but it only achieves a 36.9% Win Rate overall. This means that in the majority of cases, the human-written answer was still considered superior or equal to GPT-4o.

- Domain Variation: Performance varies wildy by domain.

- In Finance [FI], Mixtral-8x22B actually outperforms GPT-4o (44.1% vs 38.4%).

- In Biomedical [BI], GPT-4o dominates with a 52.9% win rate.

- Model Size Matters: There is a steep drop-off for smaller models. Look at Command R (Row 9); it only manages an 11.1% win rate, highlighting that long-form synthesis requires the reasoning capabilities of larger parameter models.

Elo Ratings and Ranking stability

To ensure these rankings were robust, the authors converted these pairwise comparisons into Elo ratings (the same system used in Chess and video games).

Table 5 confirms the hierarchy. LFRQA (the human baseline) sits at the top with an Elo of 1145. GPT-4o is the closest challenger at 1081.

The authors also performed an interesting validation check (Columns B and C). They added additional pairwise comparisons between the models themselves (not just against humans) to see if the ranking changed. The rankings remained stable, suggesting that comparing models only against LFRQA is a computationally efficient proxy for full pairwise tournaments.

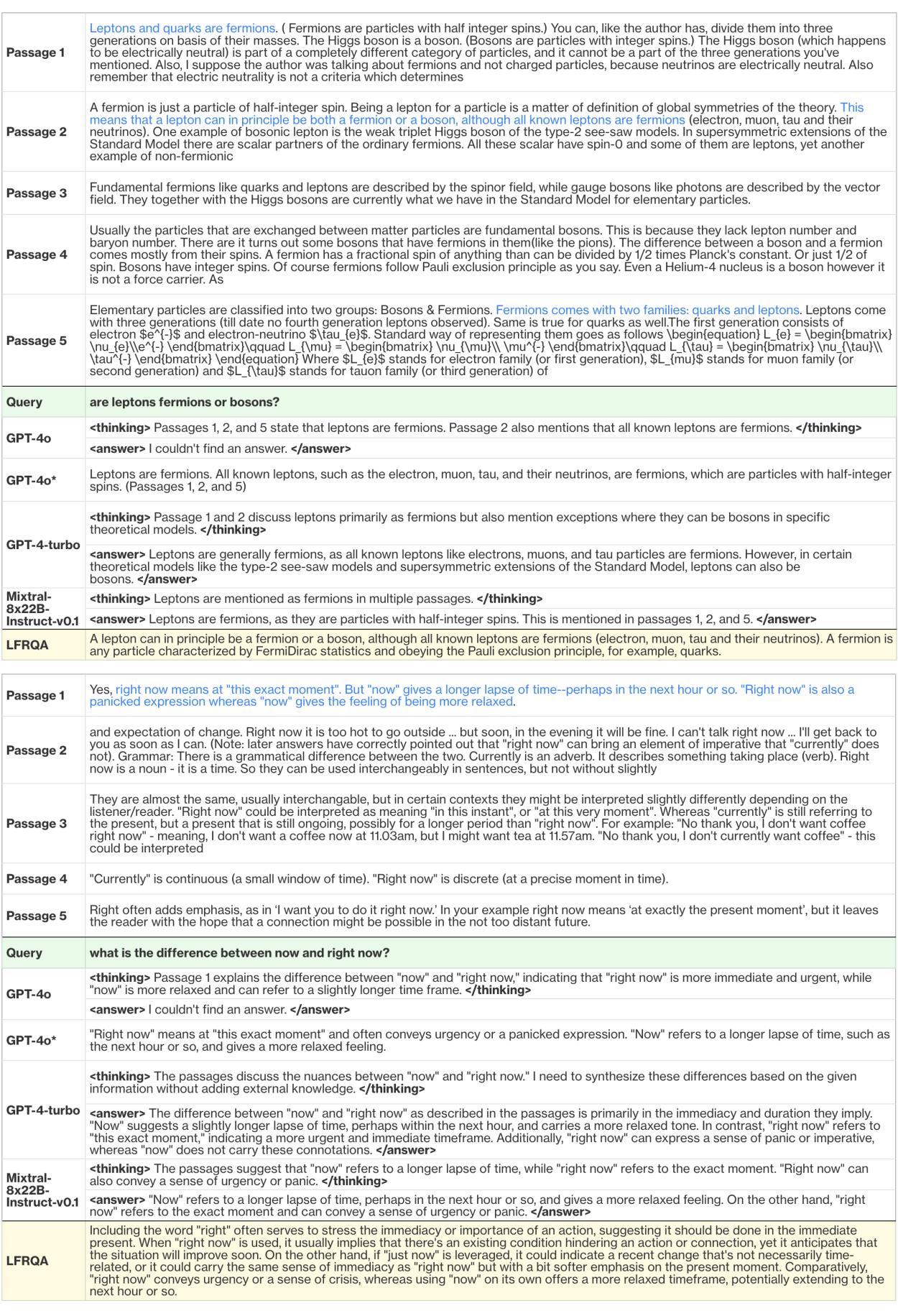

The “Over-Refusal” Phenomenon

One of the most fascinating findings in the paper was a behavioral quirk in GPT-4o.

RAG systems rely on retrieved documents. sometimes those documents are irrelevant. A good RAG system should say, “I couldn’t find an answer” rather than hallucinating.

However, the researchers found that GPT-4o was rejecting questions at an alarming rate—48.3% of the time it claimed it couldn’t find an answer, even when the answer was in the text.

Figure 9 shows a clear example of this failure mode.

- The Query: “What is the difference between now and right now?”

- The Passages: Clearly explain the nuance (immediate vs. relaxed timeframe).

- GPT-4o (Original): It successfully identifies the difference in its internal “thinking” process (see the

<thinking>tag), but then abruptly concludes: “I couldn’t find an answer.” - The Fix (GPT-4o):* By removing the “Chain of Thought” (CoT) instruction from the prompt, the model became less conservative and correctly answered the question.

This highlights a critical lesson for RAG developers: Prompt Engineering matters. The safety guardrails of advanced models like GPT-4o can sometimes be too aggressive for RAG tasks, leading to false negatives where the model refuses to answer valid questions.

Conclusion and Implications

The “RAG-QA Arena” paper makes a compelling case that we need to graduate from extractive, Wikipedia-based benchmarks. As we deploy LLMs into specialized fields like law, finance, and science, we need evaluation datasets that mirror that complexity.

Key Takeaways for Students and Practitioners:

- Context Length != Comprehension: Just because a model can read 100k tokens doesn’t mean it can synthesize them well. Synthesis is a reasoning task, not just a retrieval task.

- Synthesis is the Frontier: The gap between retrieving documents and writing a coherent report is where current models struggle. LFRQA exposes this gap.

- Human-in-the-loop Data: High-quality evaluation requires high-quality data. The effort to create LFRQA (human synthesis of snippets) provides a much stronger signal than automated scraping.

- Evaluation is Hard: The “I couldn’t find an answer” error shows that metrics aren’t just about accuracy; they are also about the model’s willingness to answer and its calibration of uncertainty.

By using frameworks like RAG-QA Arena, researchers can now push the boundaries of RAG systems, ensuring they are robust enough for the messy, multi-document reality of the real world.

](https://deep-paper.org/en/paper/2407.13998/images/cover.png)