Negotiation is one of the most stressful yet essential skills in modern life. Whether you are buying a car, discussing a salary, or settling a rent price, the ability to advocate for yourself directly impacts your financial well-being.

Unfortunately, effective negotiation is rarely taught in schools. It is a “reflexive behavioral habit” usually refined only through expensive MBA seminars involving role-playing and expert coaching. This exclusivity creates a gap: populations that could benefit most from these skills—such as women and minorities, who are statistically often less accustomed to self-advocacy—lack access to high-quality training.

This brings us to a fascinating question: Can Large Language Models (LLMs) democratize this exclusive coaching?

In this post, we are deep-diving into ACE (Assistant for Coaching nEgotiation), a novel system developed by researchers at Columbia University. ACE doesn’t just chat with you; it watches you negotiate, identifies specific tactical errors based on MBA-level criteria, and teaches you how to do better next time.

The Problem with Current AI Tutors

The recent boom in LLMs (like GPT-4) has led to a flood of “AI Tutors.” However, most of these systems have a flaw when it comes to soft skills. They act as conversation partners, but they rarely provide the structured, critical feedback a human professor would.

Practicing negotiation by simply chatting with an AI is like practicing tennis against a wall. You get to hit the ball, but the wall won’t tell you your grip is wrong or your stance is weak. To actually learn, you need a coach who spots your mistakes and explains why they are mistakes.

ACE attempts to solve this by combining a conversational agent with a rigorous, expert-defined Annotation Scheme.

Step 1: Defining “Good” Negotiation

Before an AI can teach, it must know what to look for. The researchers didn’t rely on generic internet conversations. Instead, they collaborated with an instructor of an MBA negotiation course.

They collected a dataset of 40 high-quality negotiation transcripts between MBA students. These weren’t random crowd-workers; these were students trained in bargaining tactics, negotiating over realistic scenarios like buying a used Honda Accord.

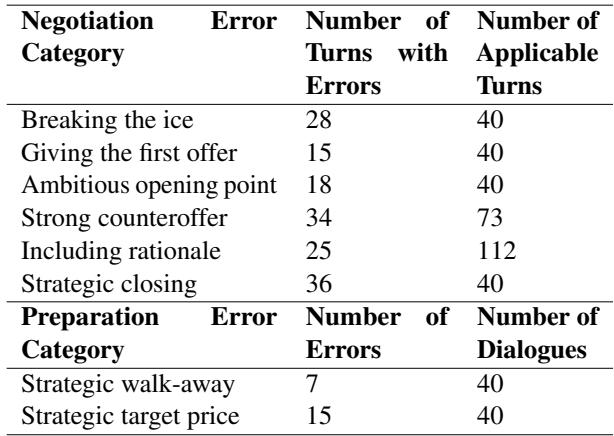

As seen in Table 1, the dataset captures the complexity of real deals, with varied deal percentages and amounts. Using this data and expert consultations, the researchers developed an Annotation Scheme—a rubric for scoring negotiation performance.

The 8 Errors of Negotiation

The core intelligence of ACE lies in its ability to detect eight specific types of errors. These are divided into Preparation Errors (before the chat starts) and Negotiation Errors (during the chat).

Preparation Errors:

- Strategic Walk-away Point: Did the user calculate the absolute maximum they should pay based on their budget and alternatives?

- Strategic Target Price: Did the user set an optimistic but realistic goal? (Too high, and you leave money on the table; too low, and you’re unrealistic).

Negotiation Errors:

- Breaking the Ice: Did the user start with social rapport building?

- Giving the First Offer: Did the user anchor the negotiation by offering a price first?

- Ambitious Opening Point: Was the first offer aggressive enough?

- Strong Counteroffer: When the opponent made an offer, did the user counter effectively?

- Including Rationale: Did the user explain why they offered a specific price?

- Strategic Closing: Did the user close the deal professionally without gloating?
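To make the rubric concrete, here is one way the eight error types could be represented in code. This is only an illustrative sketch: the names `Phase`, `ErrorType`, and `errors_in_phase` are our own and do not come from the paper.

```python
from enum import Enum


class Phase(Enum):
    PREPARATION = "preparation"   # checked before the chat starts
    NEGOTIATION = "negotiation"   # checked during the chat


class ErrorType(Enum):
    # Each member stores (human-readable label, phase in which it is checked).
    STRATEGIC_WALK_AWAY_POINT = ("Strategic Walk-away Point", Phase.PREPARATION)
    STRATEGIC_TARGET_PRICE = ("Strategic Target Price", Phase.PREPARATION)
    BREAKING_THE_ICE = ("Breaking the Ice", Phase.NEGOTIATION)
    GIVING_THE_FIRST_OFFER = ("Giving the First Offer", Phase.NEGOTIATION)
    AMBITIOUS_OPENING_POINT = ("Ambitious Opening Point", Phase.NEGOTIATION)
    STRONG_COUNTEROFFER = ("Strong Counteroffer", Phase.NEGOTIATION)
    INCLUDING_RATIONALE = ("Including Rationale", Phase.NEGOTIATION)
    STRATEGIC_CLOSING = ("Strategic Closing", Phase.NEGOTIATION)

    def __init__(self, label, phase):
        self.label = label
        self.phase = phase


def errors_in_phase(phase):
    """Return the error types that are checked in the given phase."""
    return [error for error in ErrorType if error.phase is phase]


if __name__ == "__main__":
    for error in errors_in_phase(Phase.NEGOTIATION):
        print(error.label)
```

Keeping the phase next to each label means a checker can ask for only the preparation errors before the chat and only the negotiation errors afterwards.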

Table 3 shows the prevalence of these errors in the training data. Notably, “Strategic Closing” and “Strong Counteroffer” were frequent stumbling blocks, highlighting exactly where students need the most help.

Step 2: The ACE System Architecture

Now that we understand the criteria, let’s look at how ACE actually works. The system is designed as a pipeline that guides the learner through a complete educational loop.

1. The Negotiation Agent

First, the user negotiates with a chatbot powered by GPT-4. However, a standard GPT-4 model is often too “nice”—it tends to be agreeable and caves in easily.

To fix this, ACE uses Dynamic Prompting. The agent is given a “subjective limit” that is stricter than its actual walk-away price. As the negotiation progresses, this limit is slowly relaxed. This forces the user to work harder for the deal, simulating a tough, human bargainer rather than a pushover bot.
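A rough sketch of the idea follows. The post does not spell out how the limit is relaxed, so everything specific here is an assumption: the agent plays the seller, the limit moves linearly toward the real walk-away price, and the function names, prompt wording, and dollar amounts are all made up for illustration.

```python
def subjective_limit(turn, walk_away, initial_limit, relax_turns):
    """Seller-side price floor for a 0-based turn.

    Starts at `initial_limit` (stricter than the real walk-away price) and
    moves linearly to `walk_away` over `relax_turns` turns. The linear
    schedule is an assumption, not the paper's documented method.
    """
    if relax_turns <= 0:
        raise ValueError("relax_turns must be positive")
    progress = min(max(turn, 0), relax_turns) / relax_turns
    return initial_limit + (walk_away - initial_limit) * progress


def build_seller_prompt(turn, walk_away, initial_limit, relax_turns):
    """Rebuild the instruction for each turn so the model sees the current limit."""
    limit = subjective_limit(turn, walk_away, initial_limit, relax_turns)
    return (
        "You are selling a used car. "
        f"Do not accept any offer below ${limit:,.0f}. "
        "Push back on low offers and justify your price."
    )


if __name__ == "__main__":
    # Hypothetical numbers: true walk-away $10,000, initial stance $12,000.
    for turn in range(6):
        print(turn, build_seller_prompt(turn, 10_000, 12_000, 4))
```

Because the prompt is rebuilt every turn, the model never sees its true walk-away price until the schedule has fully relaxed, which is what keeps it from caving early.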

2. The Feedback Loop

This is where ACE shines. Once the negotiation is over (or during turns), the system analyzes the transcript to generate feedback.

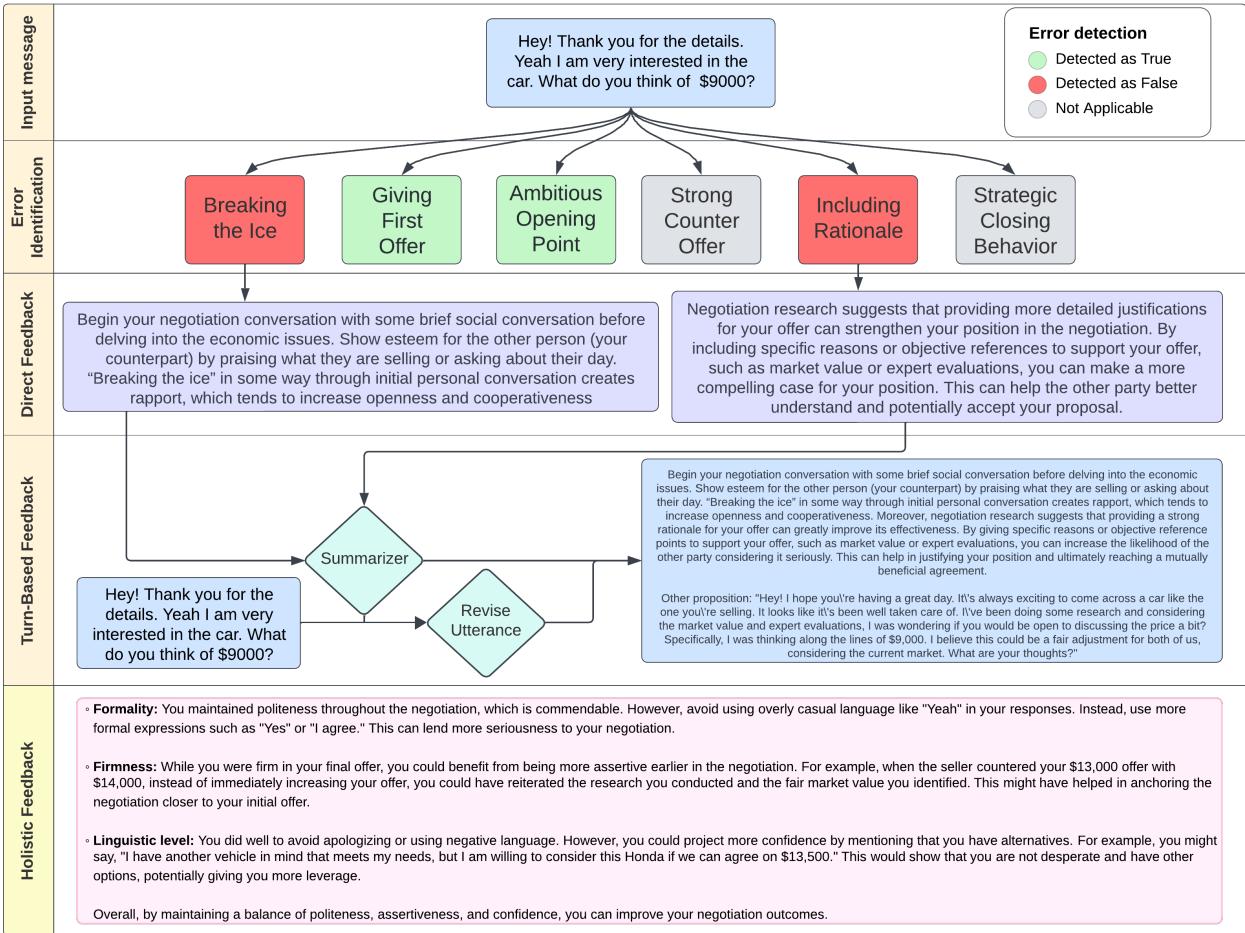

As illustrated in Figure 1, the feedback pipeline is sophisticated. It doesn’t just guess; it uses specific logic paths:

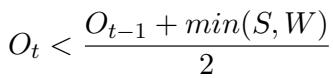

A. Identifying Mathematical Errors

Negotiation is partly math. ACE extracts price offers from the text and checks them against optimal formulas. For example, to check whether a user made a Strong Counteroffer (\(O_t\)), ACE uses this formula:

\[ O_t \leq \frac{O_{t-1} + \min(S, W)}{2} \]

In plain English: Your counteroffer (\(O_t\)) should be lower than the midpoint between your previous offer (\(O_{t-1}\)) and the lower of the seller’s offer (\(S\)) or your walk-away point (\(W\)). If you offer more than that, you are conceding too much ground too quickly.
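The plain-English rule translates directly into a few lines of code. This is a minimal sketch from the buyer's point of view; the function name and the dollar amounts are ours, chosen only to illustrate the check.

```python
def is_strong_counteroffer(offer, previous_offer, seller_offer, walk_away):
    """Check a buyer's counteroffer against the midpoint rule.

    The counteroffer should not exceed the midpoint between the buyer's
    previous offer and the lower of the seller's offer and the buyer's
    walk-away point.
    """
    ceiling = (previous_offer + min(seller_offer, walk_away)) / 2
    return offer <= ceiling


if __name__ == "__main__":
    # Hypothetical numbers: previous offer $10,000, seller asks $13,000,
    # walk-away point $12,500, so the ceiling is $11,250.
    print(is_strong_counteroffer(11_000, 10_000, 13_000, 12_500))  # within the ceiling
    print(is_strong_counteroffer(11_500, 10_000, 13_000, 12_500))  # concedes too much
```

Taking the minimum of the seller's offer and the walk-away point matters when the seller is still asking for more than you could ever pay: in that case the rule measures concessions against your own limit rather than the seller's price.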

Similarly, for an Ambitious Opening Point, the system checks if the opening offer (\(O_1\)) creates a favorable anchor relative to the target (\(T\)):

\[ \frac{S + O_1}{2} \leq T \]

This formula ensures that the midpoint between the seller’s price (\(S\)) and your opening offer (\(O_1\)) is still within your target range (\(T\)). If not, you’ve started the negotiation in a losing position.
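The opening-offer check is just as short. Again, this is only a sketch with names and numbers of our own choosing; the second helper simply rearranges the same inequality to show the highest opening offer that still passes.

```python
def is_ambitious_opening(opening_offer, seller_price, target):
    """True if the midpoint of the seller's price and the opening offer
    does not exceed the buyer's target price."""
    return (seller_price + opening_offer) / 2 <= target


def highest_ambitious_opening(seller_price, target):
    """Rearranging (S + O_1) / 2 <= T gives O_1 <= 2 * T - S."""
    return 2 * target - seller_price


if __name__ == "__main__":
    # Hypothetical numbers: seller asks $13,000, buyer's target is $11,500.
    print(highest_ambitious_opening(13_000, 11_500))
    print(is_ambitious_opening(9_500, 13_000, 11_500))
    print(is_ambitious_opening(11_000, 13_000, 11_500))
```

The rearranged form is handy for coaching: instead of only saying "too high," a tutor can tell the learner the highest opening offer that would still have anchored the deal at or below their target.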

B. Identifying Linguistic Errors

For soft skills like “Including Rationale” or “Breaking the Ice,” formulas don’t work. Here, ACE uses LLM-based classifiers. It feeds the user’s text into a model prompt designed to detect these specific behaviors.
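The general pattern of a prompt-based classifier can be sketched as follows. The prompt wording is invented for illustration and is not ACE's actual prompt. `ask_model` is a hypothetical placeholder for whatever function sends text to an LLM and returns its reply, and `fake_model` is a crude keyword stand-in so the example runs without any API.

```python
from typing import Callable

# Illustrative prompt only; the real ACE prompts are not reproduced here.
RATIONALE_PROMPT = (
    "You are grading a buyer's message in a price negotiation.\n"
    "Does the message give a reason that justifies the price it proposes?\n"
    "Answer with a single word: yes or no.\n\n"
    "Message: {utterance}"
)


def build_prompt(utterance: str) -> str:
    return RATIONALE_PROMPT.format(utterance=utterance)


def includes_rationale(utterance: str, ask_model: Callable[[str], str]) -> bool:
    """Classify one utterance by asking a model a yes/no question."""
    reply = ask_model(build_prompt(utterance))
    return reply.strip().lower().startswith("yes")


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call: says yes if the message contains 'because'."""
    message = prompt.split("Message: ", 1)[1]
    return "yes" if "because" in message.lower() else "no"


if __name__ == "__main__":
    print(includes_rationale(
        "I can offer $11,000 because the car needs new tires.", fake_model))
    print(includes_rationale("How about $11,000?", fake_model))
```

Passing the model in as a function keeps the classifier logic testable on its own; swapping `fake_model` for a real LLM client would not change `includes_rationale`.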

C. Generating Feedback

Once an error is found, ACE generates two things:

- Direct Feedback: An explanation of the mistake (e.g., “Your counteroffer was too high because…”).

- Utterance Revision: The system rewrites the user’s message to show what a better negotiator would have said.

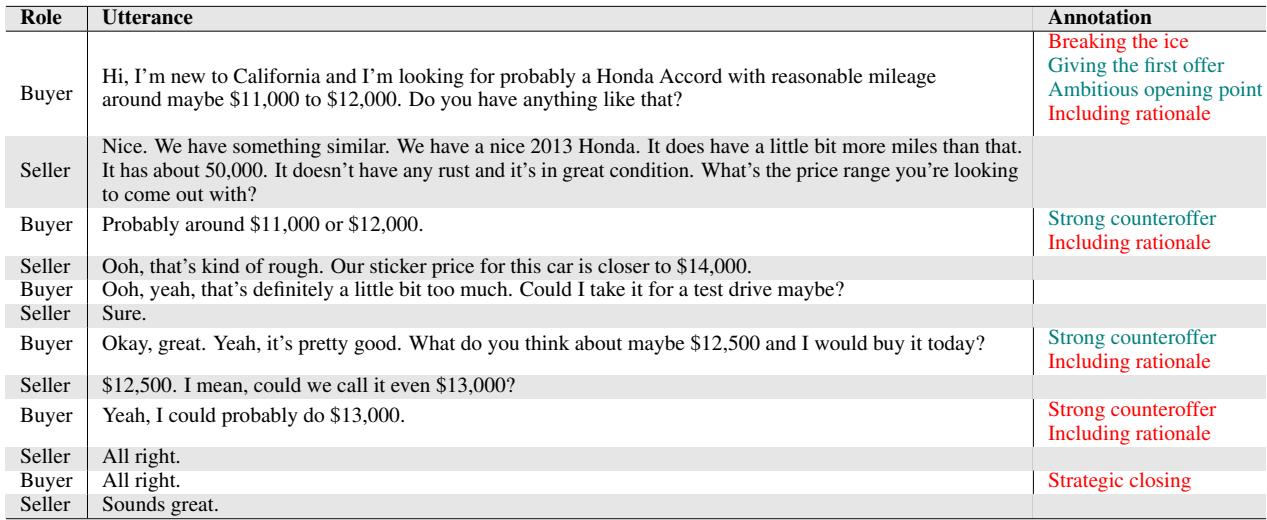

Below is an example of what the system sees versus how it annotates the dialogue.

In Table 2, you can see the system flagging specific turns. For instance, when the buyer says “Probably around $11,000 or $12,000,” the system might flag this for lacking a strong rationale if the buyer didn’t justify why those numbers make sense.

Step 3: Does ACE Actually Work?

The researchers didn’t just build the system; they rigorously tested it. The evaluation had two phases: checking the AI’s accuracy and checking the students’ learning.

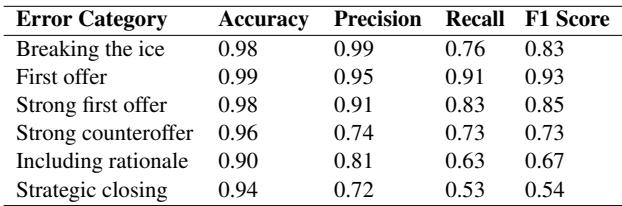

Phase 1: Error Identification Accuracy

First, can ACE correctly spot mistakes? The researchers compared ACE’s judgments against human expert labels.

Table 4 shows that ACE achieves high accuracy (over 90% for all categories). However, the F1 scores (which balance precision and recall) vary widely. ACE is excellent at spotting clear-cut behaviors like “First Offer” (0.93 F1), but is noticeably weaker on nuanced concepts like “Strategic Closing” (0.54 F1). Despite this unevenness, the system is reliable enough to provide consistent coaching.
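Why can accuracy stay above 90% while F1 drops so low? When an error type is rare, a classifier gets most turns right simply by saying "no error," and F1 ignores those easy negatives. The sketch below illustrates this with made-up confusion counts; they are not taken from Table 4.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall and F1 from confusion counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical counts: 100 turns, only 5 of which contain the error.
    # The classifier catches 3, misses 2, and raises 3 false alarms.
    metrics = classification_metrics(tp=3, fp=3, fn=2, tn=92)
    for name, value in metrics.items():
        print(f"{name}: {value:.2f}")
```

With these counts, accuracy is 0.95 while F1 is only about 0.55, the same kind of gap the post describes for “Strategic Closing.”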

Phase 2: Human Learning Outcomes

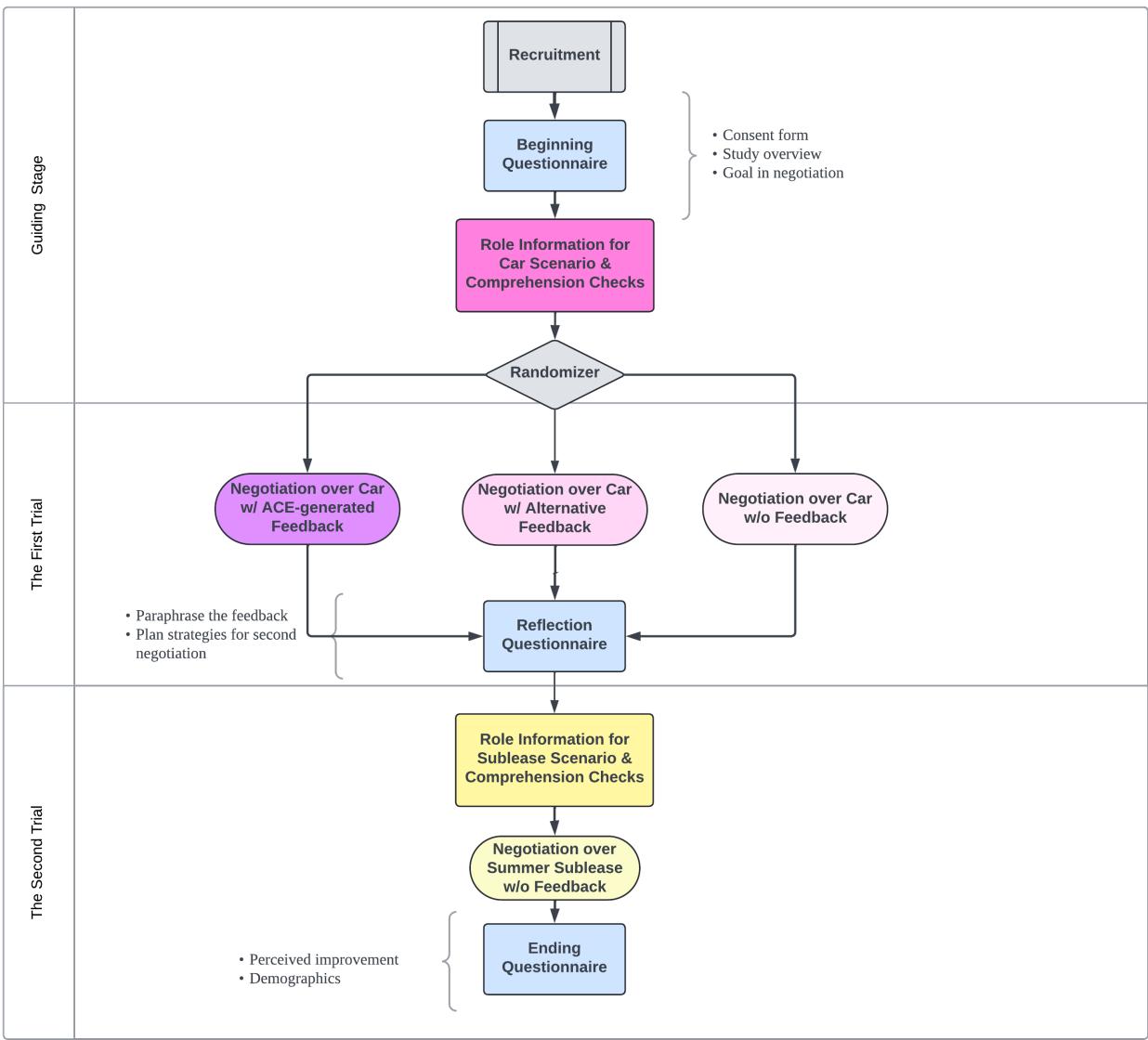

To see if ACE actually helps people become better negotiators, the researchers ran a user study with 374 participants.

The Experiment Setup: Participants were split into three groups:

- ACE Condition: Negotiated with the bot and received detailed ACE feedback.

- Other-Feedback Condition: Received generic feedback generated by GPT-4 (a method used in previous studies for AI-to-AI negotiation).

- No-Feedback Condition: Negotiated with the bot but got no coaching.

The participants performed two negotiations. First, a “Used Car” scenario (training), and then a “Summer Sublease” scenario (testing). The goal was to see if skills learned in the car negotiation transferred to the sublease negotiation.

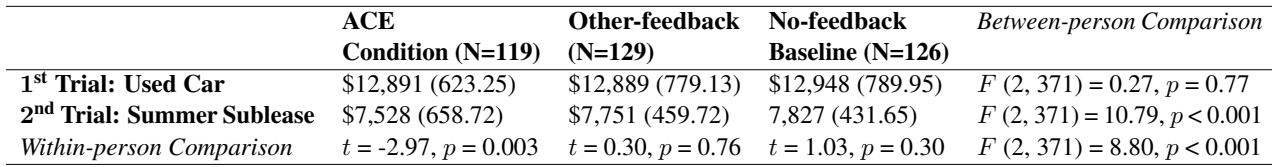

The Results: The primary metric for success was the final deal price, where lower is better for the buyer.

Table 5 tells the story clearly:

- ACE Group: In the second trial (Summer Sublease), they negotiated a significantly lower price ($7,528) compared to their first trial.

- Other/No-Feedback Groups: Their performance barely changed or got worse (paying $7,751 and $7,827 respectively).

This is a critical finding. Simply practicing (No-Feedback) didn’t help. Even receiving generic AI suggestions (Other-Feedback) didn’t help. Only the specific, formula-backed, expert-modeled feedback from ACE led to real improvement.

Furthermore, participants in the ACE condition felt more confident. They rated their perceived improvement higher than the other groups.

Conclusion: The Future of AI Coaching

The ACE system represents a significant step forward in educational technology. It moves beyond the idea of AI as a simple chatbot and positions it as a structured pedagogical tool.

By modeling the specific feedback strategies of MBA professors (identifying mathematical limits, demanding rationales, and critiquing closing strategies), ACE provides evidence that soft skills can be taught effectively by machines.

The implications extend far beyond buying used cars. Systems like ACE could provide scalable, high-quality training for salary negotiations, business deals, and conflict resolution, making these critical life skills accessible to everyone, regardless of their background or access to expensive education.

Negotiation is an art, but as this paper shows, there is a science to teaching it—and AI is beginning to master it.
