Large Language Models (LLMs) like ChatGPT and GitHub Copilot have exploded into the software development world, promising nothing short of a revolution. We’ve all heard the stories—developers completing complex tasks in minutes instead of hours, AI pair programmers that never tire, and teams that seem to operate faster than ever. The excitement has been palpable. Companies are racing to integrate these tools, and developers are rewriting their workflows, often replacing a deep dive into documentation or Stack Overflow with a quick query to an AI assistant.

But as the hype fades, a more complex picture emerges. Is this new pillar of software development a sturdy foundation—or a shaky crutch? Does using LLMs genuinely improve productivity, or are we slowly trading our skills for convenience? For every story of a developer saving hours, there’s another whisper of lost coding intuition, subtle bugs, and the erosion of critical thinking.

This is the tension today’s software practitioners face: a delicate balancing act. A recent research paper, “Walking the Tightrope of LLMs for Software Development: A Practitioners’ Perspective” by Ferino, Hoda, Grundy, and Treude, dives deep into this issue. Instead of benchmarks or metrics, the researchers conducted detailed interviews with 22 software practitioners to uncover how LLMs truly reshape daily work, collaboration, and even personality. Their findings reveal the benefits, the drawbacks, and crucially—the strategies that help developers walk this tightrope without falling.

This post unpacks their insights and explores how developers can use LLMs strategically, embracing innovation while preserving the craftsmanship and critical thinking that make great software possible.

Setting the Scene: From Hype to Reality

The researchers began with three deceptively simple questions:

- RQ1. How do LLMs take software developers forward? — What are the tangible benefits?

- RQ2. How do LLMs hold developers back? — What are the hidden costs and challenges?

- RQ3. How can developers achieve a balanced use of LLMs? — How can we manage this trade-off well?

To answer them, the team turned to the “trenches”—to practitioners who use LLM-based tools daily—and listened closely.

The Method: Listening to Developers in the Trenches

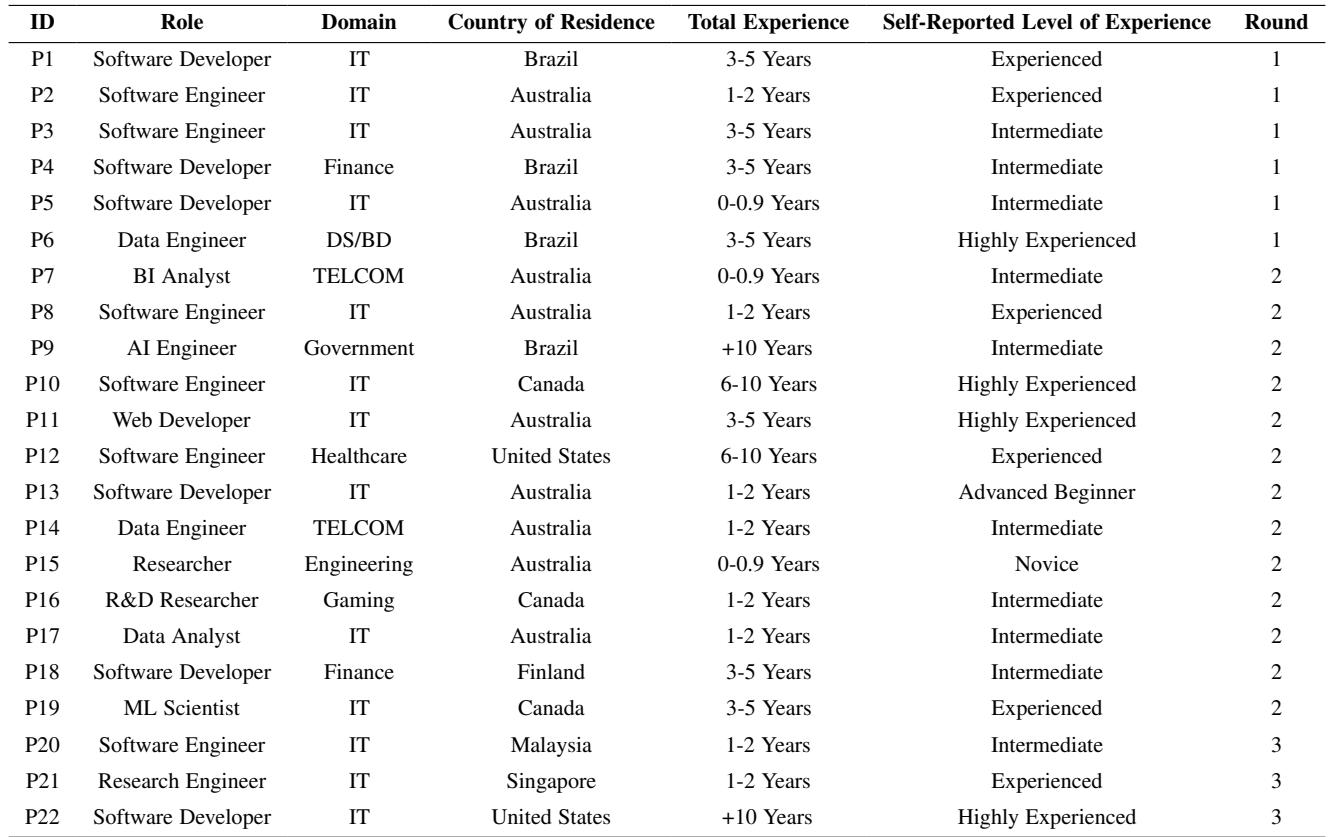

The strength of this study lies in its depth. The researchers conducted 22 semi-structured interviews with practitioners from around the world, spanning roles from software engineers and data scientists to BI analysts and research developers.

Participant demographics from the study — developers across five continents and varied levels of industry experience.

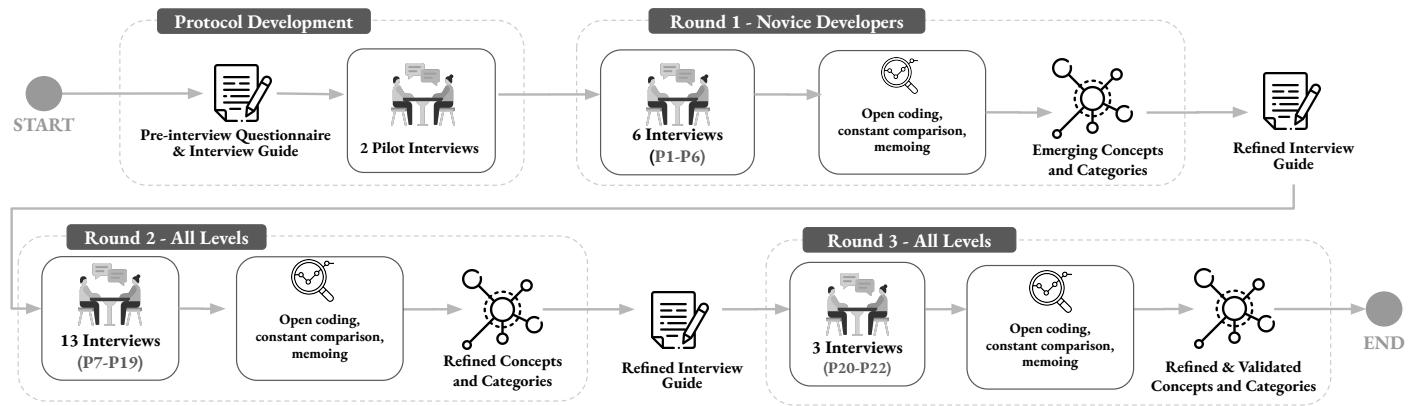

To analyze this kind of rich interview data, they applied Socio-Technical Grounded Theory (STGT), a rigorous qualitative method that considers both human and technical dimensions. In practice, that means building theory directly from what participants say rather than from preconceived ideas. STGT is especially well suited to studying modern software engineering, where emotional, social, and technical factors intertwine.

Their investigation unfolded in three iterative rounds, each refining concepts and categories as insights emerged.

The study’s three-round methodology — iterative cycles of interviews and analysis built stronger insights over time.

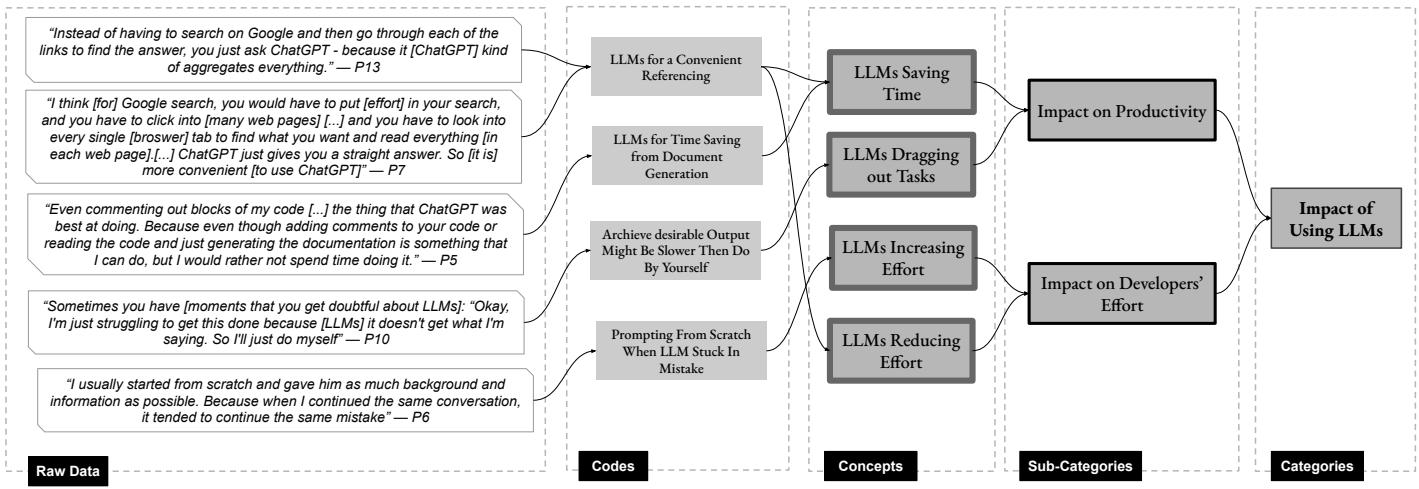

From the interview transcripts, the researchers systematically moved from raw quotes to codes, to concepts, and then to higher-level categories.

The STGT analysis process — how raw developer experiences evolved into structured categories of impact.
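To make the shape of that hierarchy concrete, here is a minimal Python sketch of the bookkeeping only. The code, concept, and category labels are hypothetical, and the two mapping tables stand in for analyst judgments. In STGT the interpretive work of deciding these groupings is done by researchers, not by lookup tables, so this is an illustration of the data structure rather than the authors' procedure.

```python
from collections import defaultdict


def roll_up(coded_quotes, code_to_concept, concept_to_category):
    """Nest quotes under category -> concept -> code.

    coded_quotes: (quote, code) pairs, where each code was assigned by an analyst.
    code_to_concept / concept_to_category: groupings the analyst decided on.
    """
    tree = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))
    for quote, code in coded_quotes:
        concept = code_to_concept[code]
        category = concept_to_category[concept]
        tree[category][concept][code].append(quote)
    return tree


# Hypothetical labels; the two quotes are ones reported later in this post.
coded_quotes = [
    ("ChatGPT gets me back on track immediately.", "fewer web searches"),
    ("You spend less time thinking about simple problems, "
     "and more time thinking about complex ones.", "offloading simple problems"),
]
code_to_concept = {
    "fewer web searches": "maintaining flow",
    "offloading simple problems": "saving time",
}
concept_to_category = {
    "maintaining flow": "individual-level benefits",
    "saving time": "individual-level benefits",
}

tree = roll_up(coded_quotes, code_to_concept, concept_to_category)
for category, concepts in tree.items():
    print(category)
    for concept, codes in concepts.items():
        print("  " + concept + ": " + ", ".join(codes))
```

Two quotes with different codes end up as separate concepts under one shared category, which mirrors how many raw observations gradually collapse into a few higher-level findings.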

Now, let’s explore what they found.

The Upside: How LLMs Move Developers Forward

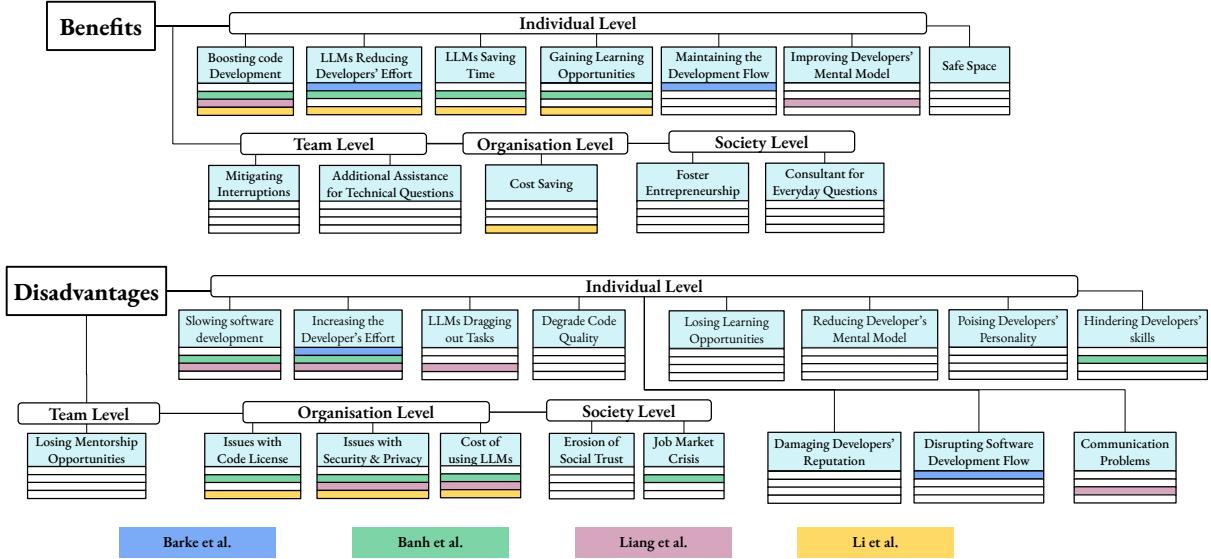

The benefits of LLMs manifested across four distinct levels—individual, team, organization, and society.

Individual Level

This is where the most immediate and visible impacts appear.

Boosting Code Development and Saving Time: Most participants reported dramatic acceleration in their workflow. By automating repetitive or boilerplate tasks, LLMs let developers focus on complex problem-solving. One developer put it succinctly: “You spend less time thinking about simple problems, and more time thinking about complex ones.”

Maintaining Flow: Deep concentration, often called the “flow state,” is notoriously hard to sustain in software development. LLMs reduce disruptive context-switching by answering simple lookups or syntax questions instantly, often without leaving the IDE. As one engineer noted, the difference is striking: “In the past, searching online for the answer took a lot of time. ChatGPT gets me back on track immediately.”

Supporting Continuous Learning: Far from replacing learning, many developers use LLMs as mentors—to explore alternative approaches, understand new languages, or learn domain concepts. One data analyst reflected: “LLMs give me a different perspective. Maybe a more concise way of doing things. I learn from them in that way.”

Creating a Safe Space: For junior developers, LLMs serve as judgment-free sounding boards. They provide instant feedback without fear of “asking dumb questions.” A researcher noted: “You can ask ChatGPT the same thing ten times, and it never judges you—just keeps explaining.”

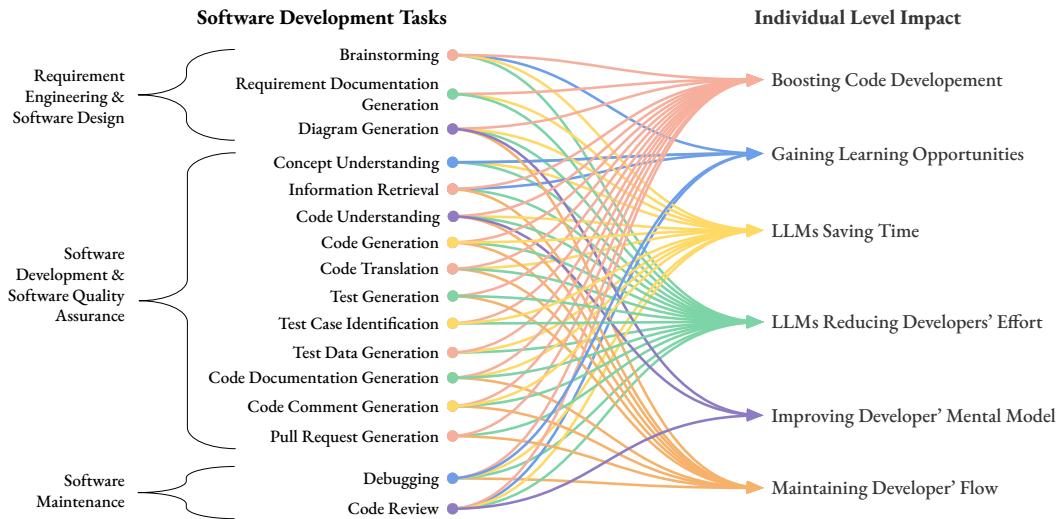

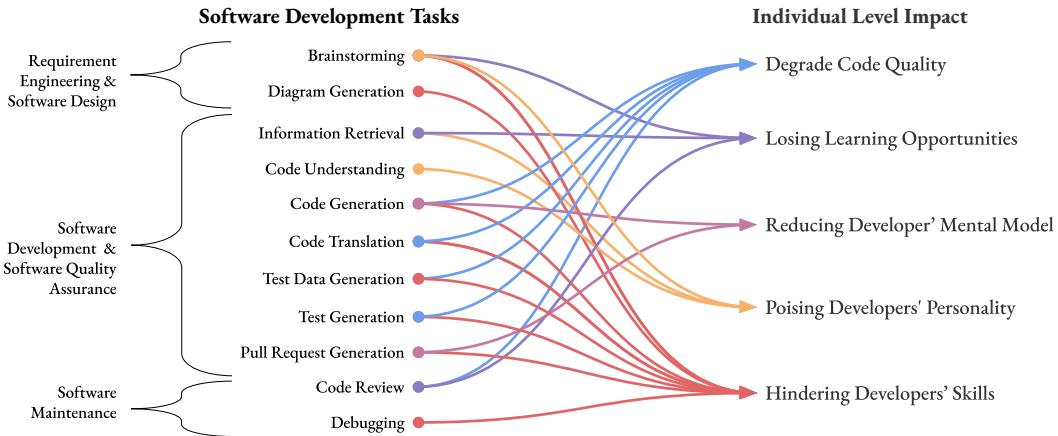

Multiple development tasks connect to core benefits such as productivity, learning, and maintaining flow.

Beyond the Individual

- Team Level: Teams experience fewer interruptions—junior developers get quick answers from AI, leaving seniors focused on their work.

- Organization Level: Faster debugging and automation translate to cost savings, especially during production incidents.

- Society Level: LLMs foster entrepreneurship by enabling rapid prototyping—essentially putting a “virtual co-founder” in the hands of new creators.

The Downside: How LLMs Can Hold Developers Back

Every revolution comes with side effects. The study exposed sharp trade-offs that demand attention.

Individual Level

Slowing Development and Increasing Effort: Ironically, LLMs can create extra work when they get “stuck” on wrong suggestions or hallucinate inaccurate answers. Developers often have to restart conversations or carefully validate outputs. One data engineer shared: “Continuing the same chat kept repeating the mistake. I had to start again from scratch.”
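One lightweight way to “carefully validate outputs” is to check a suggestion against a handful of inputs whose answers you already know before accepting it. The sketch below is illustrative and not drawn from the study: `suggested_median` stands in for a hypothetical assistant suggestion with a subtle bug on even-length input, and `failing_cases` is a hypothetical helper that reports every case where a function disagrees with the expected value.

```python
# Hypothetical assistant suggestion: looks plausible, but mishandles even-length input.
def suggested_median(values):
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


# Corrected version: average the two middle elements when the length is even.
def fixed_median(values):
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


# Inputs paired with answers worked out by hand, independent of any model output.
CASES = [
    ([3, 1, 2], 2),
    ([4, 1, 3, 2], 2.5),
    ([7], 7),
]


def failing_cases(func, cases):
    """Return (input, expected, actual) for every case where func disagrees."""
    failures = []
    for values, expected in cases:
        actual = func(values)
        if actual != expected:
            failures.append((values, expected, actual))
    return failures


print(failing_cases(suggested_median, CASES))  # [([4, 1, 3, 2], 2.5, 3)]
print(failing_cases(fixed_median, CASES))      # []
```

The suggestion passes two of the three cases, which is exactly why it could slip through a quick glance; the even-length input exposes it immediately. A check this small will not catch everything, but it turns “looks right” into evidence.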

Skill Atrophy and Lost Learning: Perhaps the most widely shared concern was skill erosion. New developers who rely on LLMs for all of their reasoning risk never building strong problem-solving foundations, while experienced engineers notice their “coding muscles” weakening: “Sometimes I just forget the basics, because I’m used to autocomplete now,” admitted one participant.

Negative Personality Shifts: Beyond technical skills, developers described growing “lazy” and less curious. One machine learning scientist confessed to letting LLMs read documentation for her: “I’ve become lazy—even for small functions I ask the model instead of reading myself.”

Damaged Reputation: Ultimately, bugs and errors from AI-generated code don’t tarnish the AI—they tarnish the human developer. As one participant remarked: “When you push your code to Git, the LLM’s name won’t be there—it’s yours.”

Tasks automated by LLMs can lead to degraded code quality or hindered skill growth if overused.

Team, Organization, and Society Levels

- Team Level: The convenience of self-service support can erode mentorship. Juniors increasingly ask LLMs instead of seniors, shrinking collaborative learning moments.

- Organization Level: Companies struggle with security, privacy, and licensing issues. Many restrict the use of public LLMs with proprietary code to prevent leaks or legal exposure.

- Society Level: Larger ethical concerns emerge. LLMs used to cheat in tech interviews or to generate misinformation undermine social trust. Participants also voiced fears about automation reducing lower-skilled developer roles.

Finding Balance: Walking the Tightrope Successfully

With both benefits and risks evident, how can developers strike the right balance? The paper’s third research question (RQ3) offers practical wisdom based on practitioners’ lived experiences.

Be Pragmatic (Know When to Use It): Treat LLMs as tools, not replacements. Use them for syntax, boilerplate, documentation, or debugging, but recognize when working a problem out yourself is faster. One developer advised: “If it’s wasting time, just stop using it and do it yourself.”

Balance Time Savings with Learning: Convenience can starve skill growth. Fight the urge to outsource everything; practice active problem-solving before consulting the model. A participant summarized the ideal mindset: “It saves me time, but it also helps me learn.”

Focus on Code Improvement, Not Full Generation: Experienced users rely on LLMs to refine and review code rather than to build entire modules unsupervised. As one developer noted: “I start writing my logic first, then ask Copilot for autocomplete or improvement.”

Mix and Match Tools: Different models excel at different tasks. Developers reported combining ChatGPT for requirements with Copilot for coding, or Claude for explanations. Treat LLMs like a toolbox, not a one-size-fits-all system.

Run Locally When Privacy Matters: Those working with sensitive data increasingly deploy open-source models locally using tools like Ollama or LM Studio to keep proprietary code private.
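For readers who want to try this, here is a minimal sketch of querying a locally hosted model through Ollama's HTTP API using only Python's standard library. It assumes Ollama is running on its default port (11434) and that a model named `llama3` has already been pulled; the model name and the helpers `build_request` and `ask_local_model` are illustrative choices, not something the study prescribes. Because the request goes to `localhost`, the prompt, including any proprietary code pasted into it, stays on your machine.

```python
import json
import urllib.request

# Default address of a local Ollama server (assumption: default port, unchanged config).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint.

    The model name is an assumption: use whichever model you have pulled locally.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, the reply is a single JSON object; the text is in "response".
    return body["response"]
```

With the server running, a call such as `ask_local_model("Suggest a clearer name for a variable called tmp2")` returns the model's answer as a string. Setting `"stream": False` trades incremental output for a single JSON reply, which keeps the parsing simple.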

The Bottom Line: Control Is the Key

LLMs are transforming software development—but transformation doesn’t mean surrendering control. The most effective developers are intentional, pragmatic, and disciplined. They use AI assistants to accelerate simple tasks and enhance understanding while keeping deep reasoning, creative problem-solving, and quality assurance firmly human.

Comparison with related studies — this research adds a broader, multi-level perspective and concrete strategies for balanced adoption.

Ultimately, as the researchers conclude, balanced control is the solution. Gains from LLMs come with trade-offs, and long-term success depends on developers’ ability to manage those trade-offs wisely.

LLMs can boost productivity, creativity, and flow—but only if we remain the architects, not the passengers. The tightrope is real, but with awareness, discipline, and curiosity, developers can walk it confidently—harnessing AI’s promise without losing the skills and intuition that define our craft.
